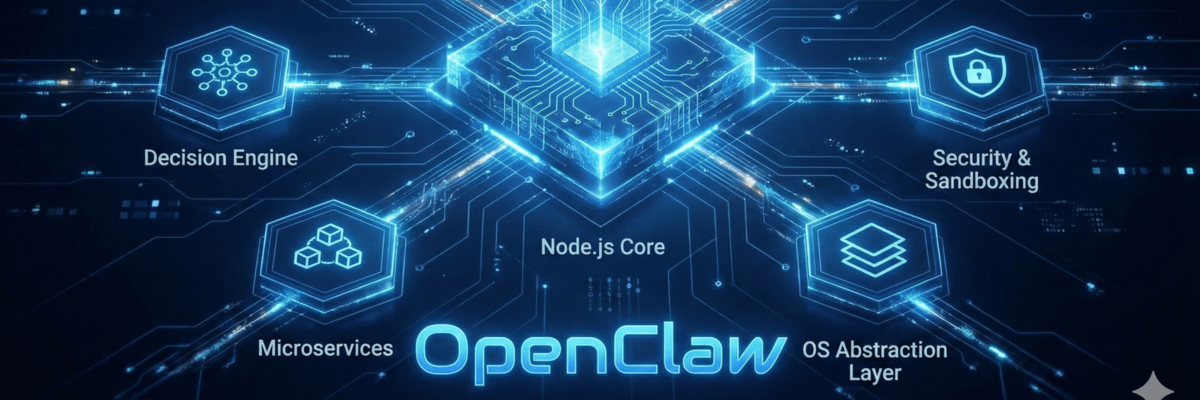

1. The architecture of the "Agentic OS" (Node.js core)

Technically, OpenClaw is built as an event-driven architecture of loosely coupled, microservice-like modules that run inside a single Node.js instance.

- The orchestrator (state machine): OpenClaw does not send a single linear query. It implements a so-called ReAct loop (Reasoning + Acting). When a message is received, it is handed to a thought process. The orchestrator manages a state machine that decides at each step whether the LLM needs to call a tool (Action), whose result is fed back to it as new information (Observation), or whether it already knows enough to answer.

- Model agnosticism via a unified API: Internally, OpenClaw often uses libraries such as the Vercel AI SDK or LangChain to place an abstraction layer over the various LLM providers. In technical terms, this means that the payload for a prompt is standardized. The core does not care whether gpt-4o, claude-3-5-sonnet or a local Llama 3 served via Ollama sits behind it. The provider-specific API responses are transformed into a standardized internal JSON format.

- Messaging bridge (webhook architecture): The connection to WhatsApp/Telegram is usually made via headless browser automation (such as whatsapp-web.js) or official webhooks. The key idea: the messenger serves as standard input/output (STDIN/STDOUT). Incoming messages are emitted as events and processed by the agent, and the response is returned to the messenger service in an asynchronous callback.
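The orchestrator loop and the normalized model response described above can be sketched roughly as follows. This is a minimal illustration, not OpenClaw's actual code: the `ModelStep` shape, `runReActLoop`, the scripted `fakeModel` and the `get_time` tool are all hypothetical names chosen for the example, and the model is a synchronous stand-in so the sketch runs without any provider or network access.

```typescript
// Normalized step that every provider adapter would be mapped to (hypothetical shape).
type ModelStep =
  | { kind: "tool_call"; tool: string; args: Record<string, string> }
  | { kind: "final"; text: string };

type Message = { role: "user" | "assistant" | "observation"; content: string };

// A model adapter maps the conversation so far to the next normalized step.
type Model = (history: Message[]) => ModelStep;
type Tool = (args: Record<string, string>) => string;

function runReActLoop(
  userMessage: string,
  model: Model,
  tools: Record<string, Tool>,
  maxSteps = 5,
): string {
  const history: Message[] = [{ role: "user", content: userMessage }];
  for (let step = 0; step < maxSteps; step++) {
    const next = model(history); // Reasoning: the model decides what to do next.
    if (next.kind === "final") return next.text;
    const tool = tools[next.tool];
    // Action: run the tool. Unknown tools are reported back instead of throwing.
    const observation = tool ? tool(next.args) : `unknown tool: ${next.tool}`;
    // Observation: feed the result back into the next reasoning step.
    history.push({ role: "assistant", content: `call ${next.tool}` });
    history.push({ role: "observation", content: observation });
  }
  return "step limit reached"; // Guard against endless tool-calling loops.
}

// Scripted stand-in for an LLM: asks for a tool once, then answers.
const fakeModel: Model = (history) => {
  const last = history[history.length - 1];
  return last?.role === "observation"
    ? { kind: "final", text: `The time is ${last.content}.` }
    : { kind: "tool_call", tool: "get_time", args: {} };
};

const answer = runReActLoop("What time is it?", fakeModel, {
  get_time: () => "12:00",
});
console.log(answer);
```

In a real setup the `Model` function would wrap an asynchronous provider call, and the incoming message would arrive as an event from the messaging bridge rather than as a hard-coded string.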

2. The execution layer & MCP (Model Context Protocol)

This is the most technically complex part. OpenClaw uses the Model Context Protocol (MCP), which was introduced by Anthropic.

- MCP clients & servers: OpenClaw acts as an MCP client. It can connect to any number of local or remote MCP servers. An MCP server is a separate process or remote service that exposes its tools through a standardized JSON-RPC 2.0 interface.

- Tool injection: When sending the prompt to the LLM, OpenClaw "injects" the definitions of all available tools (e.g. read_file, execute_shell, Google Search) into the request, either in the system prompt or in the provider's dedicated tools field. Instead of plain text, the LLM can then respond with a structured tool call (e.g. { "call": "execute_shell", "command": "ls -la" }).

- Sandboxing vs. native execution: Whereas hosted AI services typically run tool code in isolated environments such as Docker containers, OpenClaw often executes commands natively in the shell of the host system (depending on the configuration). Technically, this is done via the Node.js child_process module. This allows full control over the OS, but requires security mechanisms such as "human-in-the-loop" confirmations for critical commands.
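To make the execution path more concrete, here is a small sketch of how a structured tool call could be wrapped into a JSON-RPC 2.0 request and held back for confirmation when it looks critical. MCP does use the `tools/call` method with a `name` and `arguments` payload; everything else (`planToolCall`, `requiresConfirmation`, the pattern list) is a hypothetical illustration and not taken from OpenClaw. The sketch only plans the call and deliberately executes nothing.

```typescript
type ToolCall = { name: string; arguments: Record<string, unknown> };

type JsonRpcRequest = {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params: ToolCall;
};

// Wrap a tool call in a JSON-RPC 2.0 envelope; MCP invokes tools via "tools/call".
function toJsonRpcRequest(call: ToolCall, id: number): JsonRpcRequest {
  return { jsonrpc: "2.0", id, method: "tools/call", params: call };
}

// Hypothetical patterns that mark a shell command as critical (an assumption,
// far from exhaustive and not a substitute for real sandboxing).
const CRITICAL_PATTERNS: RegExp[] = [/\brm\b/, /\bsudo\b/, /\bmkfs\b/, /\bshutdown\b/];

function requiresConfirmation(call: ToolCall): boolean {
  if (call.name !== "execute_shell") return false;
  const command = String(call.arguments["command"] ?? "");
  return CRITICAL_PATTERNS.some((pattern) => pattern.test(command));
}

type Decision =
  | { status: "dispatch"; request: JsonRpcRequest }
  | { status: "needs_confirmation"; call: ToolCall };

// Decide whether a call may go to the MCP server or must wait for the user.
function planToolCall(call: ToolCall, id: number, approved = false): Decision {
  if (requiresConfirmation(call) && !approved) {
    return { status: "needs_confirmation", call };
  }
  return { status: "dispatch", request: toJsonRpcRequest(call, id) };
}

console.log(planToolCall({ name: "execute_shell", arguments: { command: "ls -la" } }, 1));
console.log(planToolCall({ name: "execute_shell", arguments: { command: "sudo rm -rf /tmp/x" } }, 2));
```

A pattern list like this is only a first line of defense. The point of the sketch is the control flow: the critical call is returned to the messaging layer as a pending decision, and it is only dispatched once the user has explicitly approved it.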

3. Persistent storage: the hybrid of Markdown files and a vector index

Why Markdown (SOUL.md, MEMORY.md) instead of an SQL database?

- Context Window Management: LLMs have a limited context window, so the complete memory cannot be sent along with every prompt. OpenClaw therefore uses a technique called RAG (Retrieval-Augmented Generation), but at the file level.

- Technical process: Before a prompt is sent, a script reads the Markdown files. Important passages are often converted into embeddings (vector representations of the text) and loaded into a temporary vector index. Only the "snippets" from MEMORY.md that are relevant to the current task are copied into the prompt.

- Self-updating files: After completing a task, the agent generates a summary. A tool call actively writes this back into the Markdown file. Technically, this is an fs.writeFile call that is controlled by the LLM in order to serialize its own "state" for the next session.
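The retrieval step can be illustrated with a deliberately simplified sketch. Note the assumptions: instead of real embeddings from a model, it uses a toy word-count vector and cosine similarity as a stand-in, it splits the Markdown at blank lines, and the names `splitIntoSnippets`, `embed` and `retrieveSnippets` as well as the sample memory text are invented for this example.

```typescript
type Vector = Record<string, number>;

// Split a Markdown document into snippets at blank lines.
function splitIntoSnippets(markdown: string): string[] {
  return markdown
    .split(/\n\s*\n/)
    .map((snippet) => snippet.trim())
    .filter((snippet) => snippet.length > 0);
}

// Toy "embedding": a word-count vector. A real setup would call an embedding model.
function embed(text: string): Vector {
  const vector: Vector = Object.create(null);
  for (const token of text.toLowerCase().match(/[a-z0-9]+/g) ?? []) {
    vector[token] = (vector[token] ?? 0) + 1;
  }
  return vector;
}

function norm(v: Vector): number {
  let sum = 0;
  for (const key of Object.keys(v)) sum += (v[key] ?? 0) ** 2;
  return Math.sqrt(sum);
}

function cosine(a: Vector, b: Vector): number {
  let dot = 0;
  for (const key of Object.keys(a)) dot += (a[key] ?? 0) * (b[key] ?? 0);
  const denominator = norm(a) * norm(b);
  return denominator === 0 ? 0 : dot / denominator;
}

// Return the topK snippets most similar to the task, dropping unrelated ones.
function retrieveSnippets(memory: string, task: string, topK = 2): string[] {
  const query = embed(task);
  return splitIntoSnippets(memory)
    .map((snippet) => ({ snippet, score: cosine(query, embed(snippet)) }))
    .filter((entry) => entry.score > 0)
    .sort((x, y) => y.score - x.score)
    .slice(0, topK)
    .map((entry) => entry.snippet);
}

const memoryMd = [
  "# Preferences",
  "",
  "User prefers short answers in English.",
  "",
  "Stock watchlist: check share X every morning.",
  "",
  "Server backups run on Sunday night.",
].join("\n");

console.log(retrieveSnippets(memoryMd, "Has share X fallen?", 1));
```

Only the returned snippets would be copied into the prompt. The write-back in the other direction would then append the agent's summary to the same file, for example through an `fs.writeFile` or `fs.appendFile` call behind a tool.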

4. Proactive communication (the heartbeat mechanism)

Technically speaking, a classic chatbot is a request-response system (like HTTP): it only ever reacts to user input. OpenClaw breaks with this pattern:

- Cron jobs & event loop: OpenClaw schedules cron-style jobs (for example via node-cron) or setTimeout timers that run in the background on the Node.js event loop.

- Autonomous triggers: An agent can set itself a "wake-up call". Example: "Check in 2 hours whether share X has fallen." Technically, the agent registers a task in an internal queue. As soon as the timer expires, a new thought loop is started without any user input. The result is then pushed asynchronously to the user via the messaging bridge.
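A wake-up call of this kind can be sketched as a small queue that is polled on a timer. This is an assumption-laden illustration: `HeartbeatQueue`, `tick` and the callback parameters are hypothetical names, and the current time is passed in explicitly so that the example behaves deterministically instead of relying on real timers.

```typescript
type ScheduledTask = { dueAt: number; prompt: string };

class HeartbeatQueue {
  private tasks: ScheduledTask[] = [];

  // Called by the agent (e.g. through a tool call) to set its own wake-up call.
  schedule(prompt: string, delayMs: number, now: number): void {
    this.tasks.push({ dueAt: now + delayMs, prompt });
  }

  // Remove and return all tasks whose timer has expired.
  takeDue(now: number): ScheduledTask[] {
    const due = this.tasks.filter((task) => task.dueAt <= now);
    this.tasks = this.tasks.filter((task) => task.dueAt > now);
    return due;
  }
}

// One heartbeat: start a thought loop for every due task and push the result
// to the user. Returns how many tasks were processed.
function tick(
  queue: HeartbeatQueue,
  now: number,
  startThoughtLoop: (prompt: string) => string,
  pushToUser: (message: string) => void,
): number {
  const due = queue.takeDue(now);
  for (const task of due) pushToUser(startThoughtLoop(task.prompt));
  return due.length;
}

const HOUR = 60 * 60 * 1000;
const queue = new HeartbeatQueue();
const sent: string[] = [];

queue.schedule("Check whether share X has fallen.", 2 * HOUR, 0);
tick(queue, 1 * HOUR, (prompt) => `Result for: ${prompt}`, (message) => sent.push(message));
tick(queue, 2 * HOUR, (prompt) => `Result for: ${prompt}`, (message) => sent.push(message));
console.log(sent);
```

In a running agent, `tick` would be driven by something like `setInterval` or a cron-style scheduler with `Date.now()` as the clock, `startThoughtLoop` would enter the ReAct loop, and `pushToUser` would hand the result to the messaging bridge.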

5. Vibe coding & local-first

- Vibe coding (technical): OpenClaw's code is modular enough that AI editors (such as Cursor or Windsurf) can extend its functions very quickly. The architecture relies on functional programming and clear interfaces, which allows the AI to write its own "healing scripts" or to generate new tools at runtime.

- Local-first: Local overhead is low because no heavy backend server sits between the agent and the operating system (calls to a hosted LLM still incur network latency). Data sovereignty lies with the user, as the node_modules folder and the .md files are physically located on the user's own hard disk.

To summarize:

Technically, OpenClaw is a local runtime that wires LLM intelligence to the operating system via JSON-RPC (MCP) and makes it controllable through asynchronous messaging events.